Master your GPU: How to measure the temperature and consumption of a graphics card 📈💻.

In another article, we explained how to measure the temperature of a processor realistically, and we also saw why it is advisable to do so. That guide was well received, so we decided to share a version of it focused on the graphics card, a component that is also very sensitive to operating temperatures.

In this new guide, we will see how to measure the temperature of a graphics card realistically. 🛠️ We will also explain how the measurement can change depending on the workload, and why it is important to put the temperature value into context alongside the card's power consumption.

Before getting into the subject, remember that each graphics card can record different temperature and power consumption values, and this does not have to be a problem. In fact, it is perfectly normal. A more powerful graphics card will generally have higher operating temperatures and power consumption than a less powerful one. 🔥

In the end, what matters is that the recorded measurements fall within the normal range for the model we are using. For example, a GeForce RTX 3050 can record average temperatures of 66 degrees and a consumption of 126 watts, as we saw in our review, while an RTX 3080 Ti can reach 80 degrees and a consumption of 349 watts.

There is a significant difference between the two graphics cards, but both sets of values are completely normal, since the first is a mid-range model and the second is a high-end model offering much more performance. 🚀 What would be unusual is for the RTX 3050 to run hotter than the RTX 3080 Ti.

What does it really mean to measure the temperature and consumption of a graphics card? 🤔

It's simple: we need to use a workload that pushes the card to 100% usage. It's the same as with a processor: if we only use it at 50%, we won't get realistic temperature or consumption data, because it will be underutilized. It wouldn't be the first time someone claims their graphics card only reaches 50 degrees, and it then turns out that this is the idle value, with the fans switched off. 😅

It is also common to see users measuring with old games in which GPU usage does not exceed 50% or 60%. To measure temperature and consumption realistically, we must use apps or games that push the graphics card to the maximum. 💪 The values we obtain then represent the maximums it can record at full capacity. In addition, the measurement must last long enough for the graphics card to reach its peak temperature.

If we just open a game and run it for a minute, it will be hard to measure the temperature realistically, since on the Windows desktop the card sits at low load and its temperature only rises gradually once a demanding game or app is running. A graphics card does not go from 40 degrees at idle to 80 degrees under heavy load in a matter of seconds. Ideally, you should spend at least 30 minutes on the test to measure the temperature realistically. 🔥

If we do short tests, it is advisable to repeat them at least three times to obtain a reliable measurement. In my case, when doing short performance tests, I avoid the built-in benchmarks of some games and instead play through the same scene, performing different actions and eliminating enemies. This creates a real workload and allows for proper temperature and consumption measurements. 🎮
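If you follow the repeat-three-times approach, a simple way to compare the runs is to take the peak temperature of each one and see how far apart they are. The sketch below assumes you already have the temperature readings (in °C) from each run, for example noted down from the overlay; the function name `summarize_runs` and enforcing the three-run minimum as a hard check are illustrative choices, not part of any tool.

```python
from statistics import mean


def summarize_runs(runs: list[list[float]], min_runs: int = 3) -> dict:
    """Summarize repeated short test runs by their peak temperature (°C).

    Hypothetical helper: each inner list holds the readings from one run.
    """
    if len(runs) < min_runs:
        raise ValueError(f"need at least {min_runs} runs, got {len(runs)}")
    peaks = [max(run) for run in runs]  # hottest reading in each run
    return {
        "peaks": peaks,
        "mean_peak": round(mean(peaks), 1),
        # A large spread suggests the runs were not equally demanding.
        "spread": max(peaks) - min(peaks),
    }
```

With three runs peaking at 72, 73 and 71 degrees, the mean peak is 72 and the spread is 2 degrees; a much larger spread would be a hint to repeat the test with a more consistent workload.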

Apps to measure the temperature and consumption of a graphics card

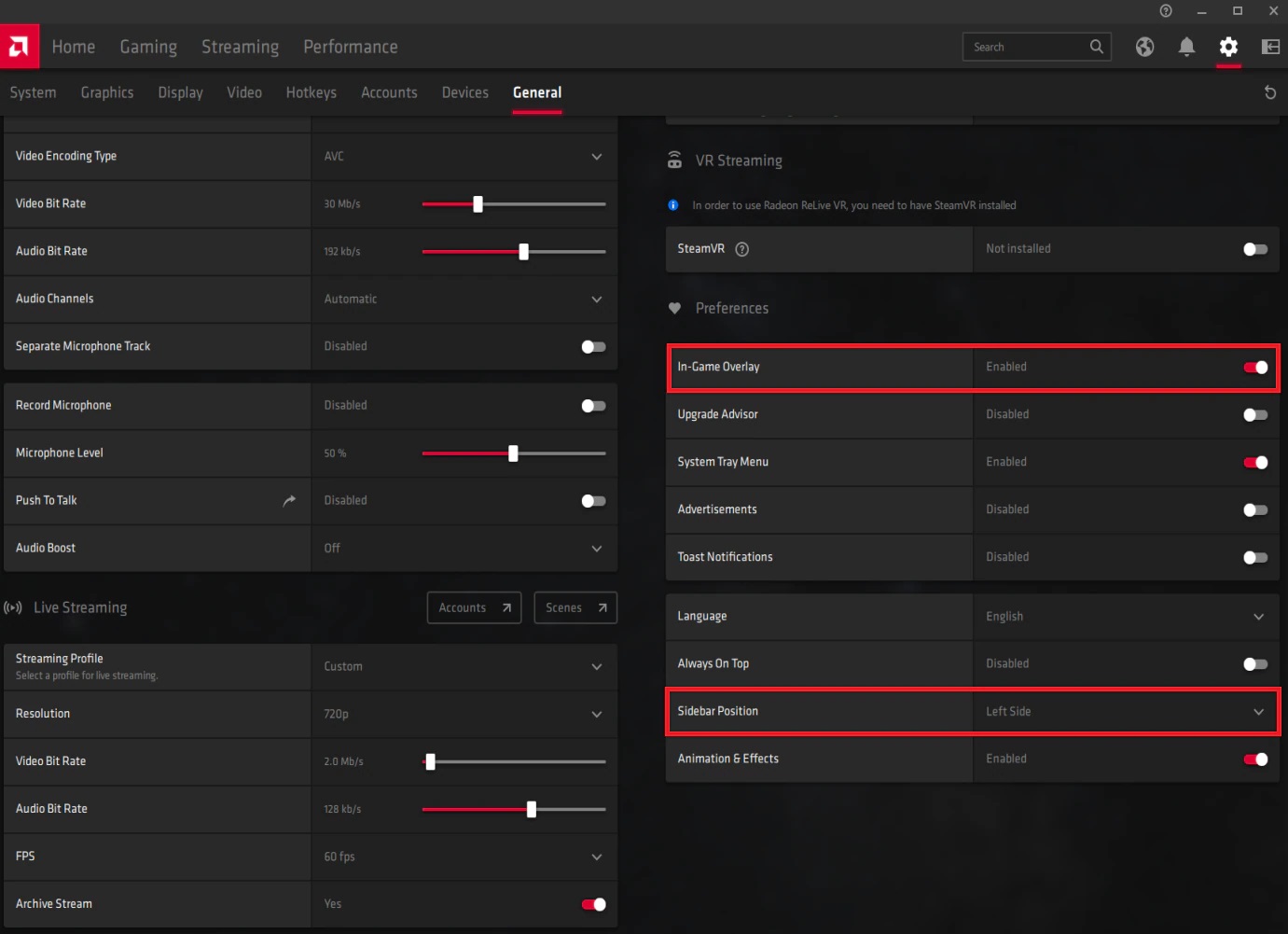

🎮 Both AMD and NVIDIA offer a tool that we can download along with their drivers, and it works like a charm. It is very easy to activate. Both include an overlay that we can enable while playing, and in which we can choose which performance measurements we want to see. In AMD's case, this tool comes with the Radeon Adrenalin software, and in NVIDIA's case with GeForce Experience.

🔥 With AMD, we can open the full interface by pressing «Alt + R», and if we only want the menu displayed as a sidebar, we should use «Alt + Z». For those using an NVIDIA graphics card, the shortcuts are «Alt + Z» to open the full interface, where we can also choose how many measurements to show and where the overlay is placed, and «Alt + R» to toggle the measurement overlay directly.

📊 With both tools we can measure the temperature and consumption of the graphics card, as well as its usage and other important characteristics, such as the GPU's operating frequencies. All of this gives you the context you need to make an accurate measurement that reflects the workload you are using.
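If you prefer to log the values instead of reading them off the overlay, NVIDIA's driver also installs the `nvidia-smi` command-line tool, which can print the same metrics as CSV. This is an alternative the guide itself does not use, and it only applies to NVIDIA cards; the sketch below assumes `nvidia-smi` is on your PATH and reads only the first GPU. `GpuSample`, `parse_sample` and `read_sample` are hypothetical helper names.

```python
import subprocess
from dataclasses import dataclass

# Field names accepted by `nvidia-smi --query-gpu` (listed by `nvidia-smi --help-query-gpu`)
QUERY_FIELDS = "temperature.gpu,power.draw,utilization.gpu,clocks.gr"


@dataclass
class GpuSample:
    temp_c: float     # GPU core temperature in °C
    power_w: float    # current board power draw in W
    usage_pct: float  # GPU utilization in %
    clock_mhz: float  # current graphics clock in MHz


def parse_sample(line: str) -> GpuSample:
    """Parse one CSV line such as '72, 215.34, 99, 1905'.

    Note: some cards report '[N/A]' for power.draw, which float() rejects.
    """
    temp, power, usage, clock = (float(field) for field in line.split(","))
    return GpuSample(temp, power, usage, clock)


def read_sample() -> GpuSample:
    """Query nvidia-smi once and return the reading for the first GPU."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_sample(result.stdout.splitlines()[0])
```

Calling `read_sample()` once per second during a 30-minute session and keeping the results gives you a log of the whole test.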

🔧 Additionally, you can use other tools, such as MSI Afterburner, but given how complete and fast the overlay offered by AMD and NVIDIA is, I don't find that app necessary. In fact, I only use it for average performance measurements on specific occasions.

Tips for measuring the temperature and consumption of a graphics card in a real way

For this, I would avoid synthetic tests and go for a few demanding games 🎮. It is a good idea to test with several games, because we can compare them and spot possible discrepancies, and also because we can see how our graphics card behaves under different workloads. Even better if we also test titles that use different technologies that push our graphics card to the maximum, such as ray tracing! ✨

I mention this because, when running performance tests, I have found relatively large differences on more than one occasion. These differences appeared between titles that used different technologies and settings. For example, enabling DLSS can reduce both temperatures and GPU usage, while enabling ray tracing can have the opposite effect, since it puts a greater load on the graphics card 🔥.

When you are about to measure the temperature and consumption of your graphics card, keep all of the above in mind and follow a single, consistent procedure aimed at pushing the card to its maximum 🔥.

To achieve this, make sure GPU usage always stays between 99% and 100%. If the games you are using do not reach these values, check that they are set to maximum quality at the highest possible resolution 📈, and move through areas with intense action 🎮.
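The 99-100% rule is easy to check on a log of usage readings. This is a minimal sketch, assuming you have a list of GPU usage percentages sampled at regular intervals; the 95% share of samples (to tolerate brief dips such as loading screens) is an assumption of this example, not a figure from the guide, and `load_is_maxed` is a hypothetical name.

```python
def load_is_maxed(usage_pct: list[float], threshold: float = 99.0,
                  min_share: float = 0.95) -> bool:
    """True if at least `min_share` of the usage samples are at or above `threshold` %."""
    if not usage_pct:
        return False  # no data, nothing to judge
    at_max = sum(1 for u in usage_pct if u >= threshold)
    return at_max / len(usage_pct) >= min_share
```

If this returns `False` for a session, the test probably was not demanding enough, which is exactly the situation where raising quality and resolution helps.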

To make everything we have said easier, and so that you have a general reference, I now leave you with the key guidelines that you should follow to measure temperature and consumption correctly:

- Play demanding next-gen games 🚀.

- Avoid built-in benchmarks and play real situations with a significant load ⚔️.

- Set your games to maximum quality at the highest possible resolution 🖥️.

- Play for 30 minutes, so that the temperature can reach its peak ⏱️.

- Make sure you have the latest available drivers installed 🔄.

The temperature should stabilize after a few minutes of play, and so should the consumption. The fan profile should be set to a reasonable level, so that the card doesn't sound like a plane about to take off ✈️.
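One way to tell whether the temperature has stabilized is to check that the most recent readings barely move. The sketch below assumes one reading per second, so a window of 60 samples covers the last minute, and treats a variation of 2 °C or less as stable; both values are illustrative assumptions you can tune, and `has_stabilized` is a hypothetical name. The same check works on power readings.

```python
def has_stabilized(readings: list[float], window: int = 60,
                   tolerance: float = 2.0) -> bool:
    """True if the last `window` readings vary by no more than `tolerance`."""
    if len(readings) < window:
        return False  # not enough data yet to judge
    recent = readings[-window:]
    return max(recent) - min(recent) <= tolerance
```

A card still warming up (a steady climb over the last minute) returns `False`; once the curve flattens into a plateau, it returns `True`.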

How to interpret the results and why it is worth taking these measurements

👀 At first glance there are no secrets: the temperature values we obtain reflect the maximum our graphics card can reach. So far so good, but are these values normal, or could they indicate some kind of problem? That is the key point of this kind of measurement: knowing whether everything is in order or whether, on the contrary, we should be worried. 🤔

To interpret these values, we need to know the normal temperature and consumption range of our graphics card. However, we must also consider that these values may vary depending on certain key factors:

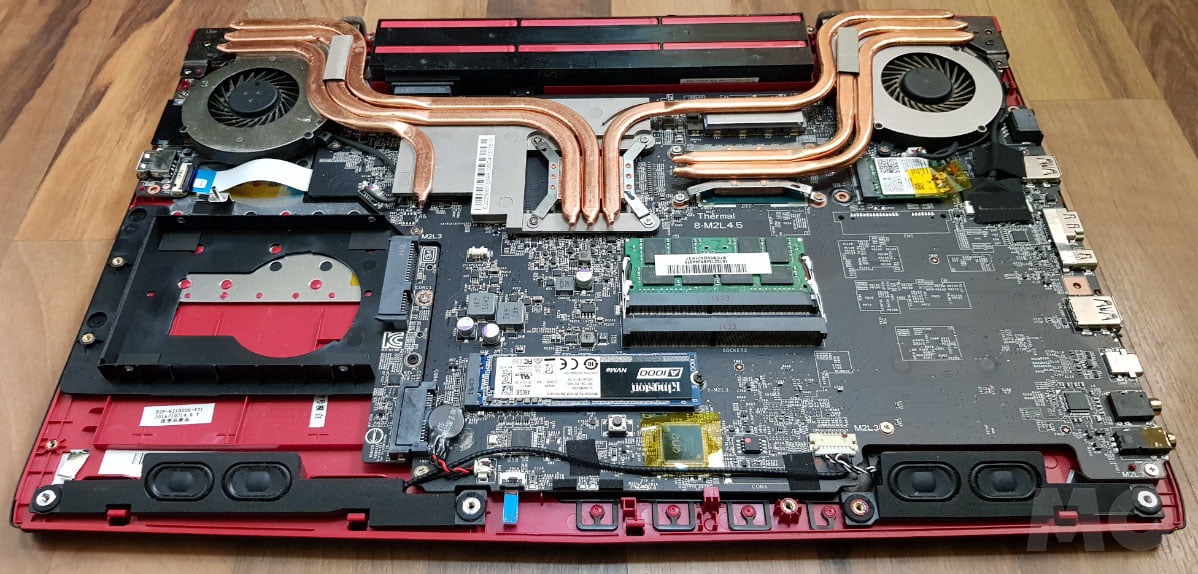

- 🔧 Cooling system design and quality: A model with a higher quality system can operate at a significantly lower temperature.

- ⚡ Factory overclocking and increased consumption: Some models come with higher clock speeds from the factory and therefore consume more power, which can also make them run hotter.

🤔 With this in mind, it is clear that a graphics card with a basic cooling system will run much hotter than one with an advanced cooling system.

This is not something to worry about, as long as the difference is reasonable and the values stay at a normal level. This brings us to another question: what is a normal level? 🤷‍♂️ It's a complex question, since I can't give specific values for each model, but I can share some data that will serve as a reference:

- 💻 Low-end and lower-mid-range graphics cards: A good example is the Radeon RX 6500 XT. They typically stay between 50 and 60 degrees, and their consumption is very low, around 100 watts.

- 🖥️ Mid-range graphics cards: There is a wide variety in this range, but they most commonly stay between 60 and 70 degrees. Getting closer to 80 degrees would not be a real problem. Consumption usually ranges between 120 and 180 watts.

- 🎮 High-end graphics cards: There is also a lot of variety, but ideally they should not exceed 75 to 80 degrees. Consumption can vary greatly depending on the capacity of each graphics card, but they usually range between 200 and 500 watts.
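To turn these reference ranges into a quick check, you can compare your peak temperature and average consumption with the upper bounds for your card's tier. This is a rough sketch that uses only the ranges listed above; the tier names and the `check_reading` function are hypothetical, low-end power is skipped because the guide only gives an approximate figure, and a note means "above the typical range", not that the card is faulty (for mid-range cards, for instance, approaching 80 degrees is still not a real problem).

```python
# Rough reference values from the list above (hypothetical tier names).
TEMP_UPPER_C = {"low-end": 60, "mid-range": 70, "high-end": 80}
# Low-end power is only given as "around 100 W", so it is not checked.
POWER_RANGE_W = {"mid-range": (120, 180), "high-end": (200, 500)}


def check_reading(tier: str, peak_temp_c: float, avg_power_w: float) -> list[str]:
    """Return notes for values outside the typical reference range (empty = typical)."""
    notes = []
    if peak_temp_c > TEMP_UPPER_C[tier]:
        notes.append(f"temperature {peak_temp_c} °C above typical ~{TEMP_UPPER_C[tier]} °C")
    if tier in POWER_RANGE_W:
        low, high = POWER_RANGE_W[tier]
        if not low <= avg_power_w <= high:
            notes.append(f"power {avg_power_w} W outside typical {low}-{high} W")
    return notes
```

For the RTX 3080 Ti figures mentioned earlier (80 degrees, 349 watts), `check_reading("high-end", 80, 349)` returns an empty list.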

Based on all of this, it is perfectly normal for a GeForce RTX 3080 Ti Founders Edition to reach 80 degrees after an hour of playing Cyberpunk 2077 at maximum quality with ray tracing, with an average consumption of around 350 watts. 🔥 However, it would be unusual for a GeForce RTX 3070 Founders Edition to record those values; it should sit at about 73 degrees with a consumption of 220-240 watts. ⚡

This brings us to the last question: why is it good to measure the temperature and consumption of a graphics card realistically? 🤔 Well, it is very simple: it allows us to verify that everything is in order. This means that our graphics card has no overheating problems, that it receives the power it needs, and that it has no safety issues. 🔍

It is crucial to push the graphics card to the maximum, that is, to 100% usage, and keep it there for at least 30 minutes, because this subjects it to a workload that is a real challenge for the system, including both the graphics card and our power supply. ⚡

If there is any problem, it will be brought to light. We will be able to identify it and take the necessary steps to resolve it. 🛠️

For example, if your computer freezes while temperatures are normal 🌡️, the first thing you should check is the power supply. On the other hand, if temperatures are extremely high 🔥, the problem could lie in the cooling system or the airflow inside your PC case.

In the first case, it would be essential to replace the power supply to prevent it from getting damaged and affecting other components. In the second case, you should make sure that the graphics card fans are working properly, that everything is clean, that the thermal paste is in good condition, and that your PC's airflow is adequate. 🛠️
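The diagnosis above boils down to a simple rule of thumb, sketched here. The 90 °C threshold for "extremely high" is an assumption of this example (the guide gives no exact figure), `likely_culprit` is a hypothetical name, and real troubleshooting will involve more checks than these two inputs.

```python
def likely_culprit(system_froze: bool, peak_temp_c: float,
                   extreme_c: float = 90.0) -> str:
    """Rule of thumb: extreme heat points to cooling, freezes at normal temps to the PSU."""
    if peak_temp_c >= extreme_c:
        return "cooling: check fans, dust, thermal paste and case airflow"
    if system_froze:
        return "power supply: check the PSU"
    return "no obvious problem"
```

Extreme temperatures are checked first, since the power supply is only the prime suspect when the system freezes with normal temperatures.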

As I mentioned when we talked about measuring CPU temperature, do not be alarmed if your graphics card's values 📈 are higher than those reported by other users on the Internet. 🌐 Those values were not always obtained from realistic measurements, and we have no guarantee that they are accurate. What matters is that your graphics card consistently stays within values we can consider normal. 💻