Windows 128-bit: The mystery that experts aren't telling you 🤯

Operating systems and processors have undergone a fascinating evolution over the decades: from 8-bit architectures in the 1980s, through 16-bit and 32-bit architectures in the 1990s, to the widespread arrival of 64-bit architectures in the 2000s. More than 20 years have passed since the first 64-bit Windows for consumers, Windows XP Professional x64 Edition, appeared. So why isn't there a 128-bit version of Windows yet? 🤔

To answer this question, it's essential to understand how operating systems interact with processors and their bit capabilities. For example, a 64-bit operating system requires a 64-bit processor to function correctly. Thus, for a 128-bit Windows we would need a compatible 128-bit processor.

Windows Vista 64-bit, a milestone in the evolution of operating systems.

64-bit processors and operating systems: a historical example

In late 2006, Microsoft released Windows Vista in both 32-bit and 64-bit editions. At that time, 64-bit processors were still relatively new to the mass market: AMD pioneered them in 2003 with its Athlon 64 processors, and Intel followed in 2004 with its 64-bit Pentium 4.

This shows that before you can have a 128-bit operating system, you must first have a 128-bit processor. Creating software that cannot run on existing hardware is neither practical nor efficient. In the same way, 64-bit processors paved the way for Windows Vista and other systems to support that architecture.

Although Windows XP Professional x64 Edition, released in 2005, was the first 64-bit Windows for mainstream consumer PCs, it was the 64-bit edition of Windows Vista that popularized the technology in the general market.

The great leap: from megabytes to gigabytes of RAM

The main reason the number of bits in operating systems has grown is the need to handle larger amounts of RAM, as applications and video games have become increasingly demanding.

In the 1980s, home computers typically had well under 1 MB of RAM. By the mid-1990s, 8 MB was enough for a comfortable experience, and by the end of that decade 32 MB was standard for basic tasks. Between 2000 and 2010, the need for memory skyrocketed: a standard PC went from 128 MB to 4 GB, a 32-fold increase (4,096 MB / 128 MB = 32). This leap was key to the adoption of 64-bit systems like Windows Vista.

Since then, growth has slowed. 8 GB of RAM began to become widespread around 2012 and, even in 2025, it is still enough for many everyday tasks and games.

Features and advantages of Windows and 64-bit processors

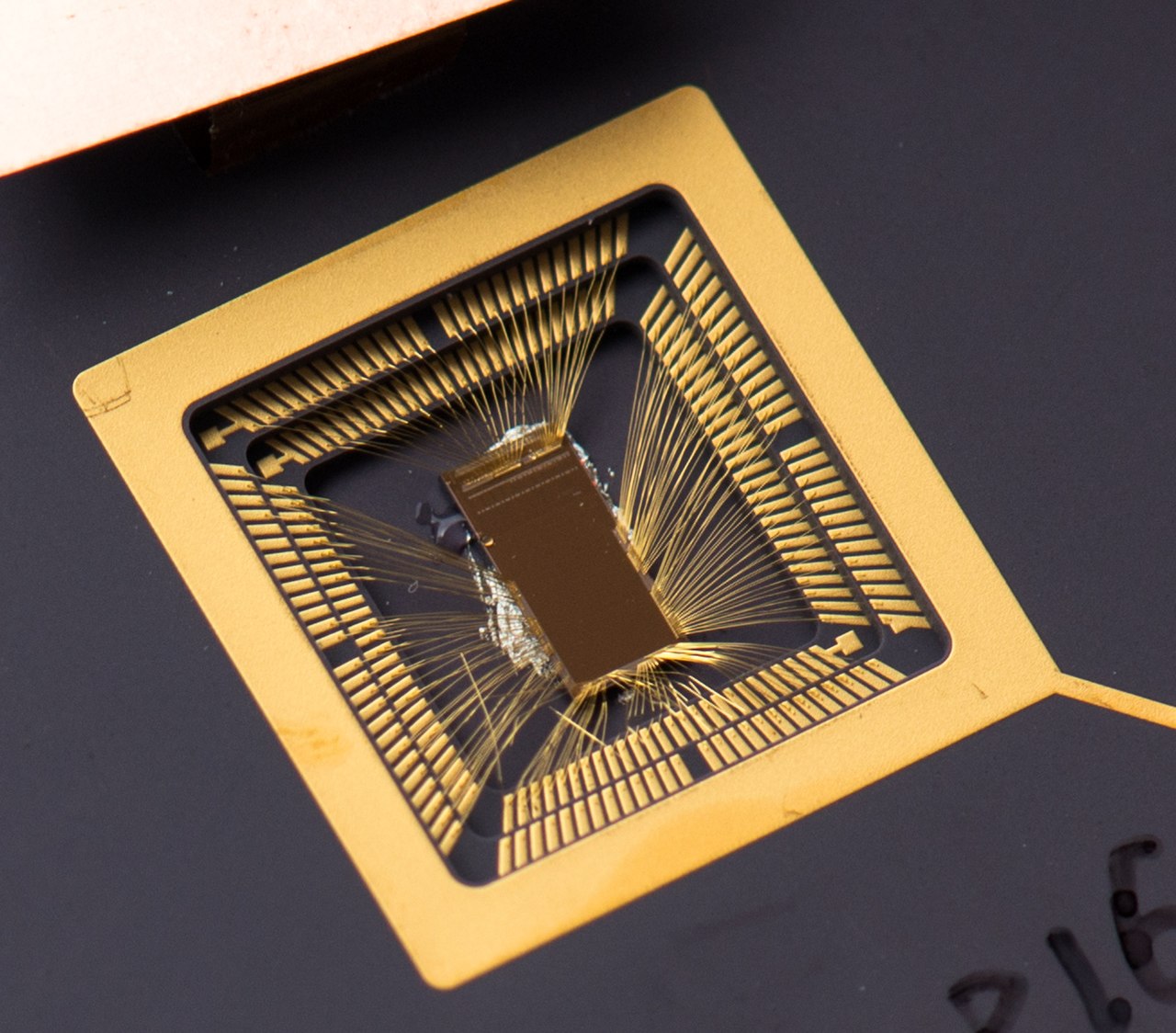

64-bit Windows marked a turning point in home computing. A processor's bit width sets the size of the data it handles natively (its registers) and of the memory addresses it uses. The more bits an address has, the more distinct memory locations it can point to, and therefore the more RAM the system can address.

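This is the theoretical maximum memory for each bit width, assuming addresses are as wide as the architecture itself (real chips often differ; most 8-bit CPUs, for instance, used 16-bit addresses):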

- 8-bit operating systems: up to 256 bytes of RAM.

- 16-bit operating systems: up to 64 KB of RAM.

- 32-bit operating systems: up to 4 GB of RAM.

- 64-bit operating systems: up to 16 EB of RAM (about 18.4 quintillion bytes, which is why it is often quoted as 18 exabytes).

To better understand the units, remember that:

- 1 KB = 1,024 bytes

- 1 MB = 1,024 KB

- 1 GB = 1,024 MB

- 1 TB = 1,024 GB

- 1 PB = 1,024 TB

- 1 EB = 1,024 PB
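The memory limits above follow directly from powers of two. Here is a minimal Python sketch, assuming (as the list does) that a system's address width equals its bit width and using the 1,024-based units; `max_addressable_bytes` and `format_binary` are hypothetical helpers written only for this illustration:

```python
# 1,024-based units, matching the list above.
UNITS = ["bytes", "KB", "MB", "GB", "TB", "PB", "EB", "ZB", "YB"]


def max_addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses reachable with `address_bits` bits."""
    return 2 ** address_bits


def format_binary(n_bytes: int) -> str:
    """Express a byte count in the largest 1,024-based unit that divides it exactly.

    For powers of two (like the limits above) the result is a whole number.
    """
    value, unit = n_bytes, 0
    while value >= 1024 and value % 1024 == 0 and unit < len(UNITS) - 1:
        value //= 1024
        unit += 1
    return f"{value:,} {UNITS[unit]}"


for bits in (8, 16, 32, 64):
    print(f"{bits}-bit: {format_binary(max_addressable_bytes(bits))}")
```

It prints 256 bytes, 64 KB, 4 GB and 16 EB. The last figure is 2^64 bytes, roughly 18.4 quintillion bytes, so it becomes "18 exabytes" when decimal units are used instead.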

This jump from 4 GB to 16 EB (roughly 18 exabytes in decimal terms) is, in theory, revolutionary for memory management, enabling more complex tasks, advanced applications, and graphics-intensive games that simply couldn't run within a 32-bit address space.

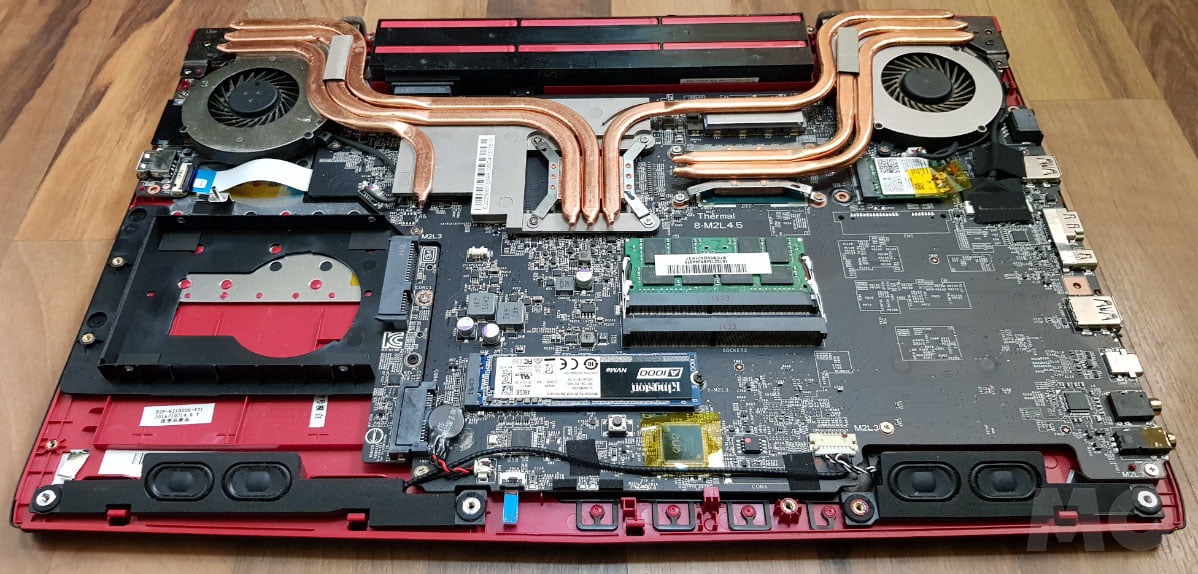

A computer with 64 GB of RAM, out of reach for consumer 32-bit versions of Windows.

Furthermore, 64-bit processors can operate on 64-bit values in a single instruction, which speeds up calculations and operations involving large numbers. 64-bit Windows also brought stronger security: Data Execution Prevention (DEP), backed by the processor's NX bit, Kernel Patch Protection, and code integrity checks such as mandatory driver signing all harden the system against threats. Dropping support for the 16-bit subsystem also removed a source of legacy vulnerabilities.

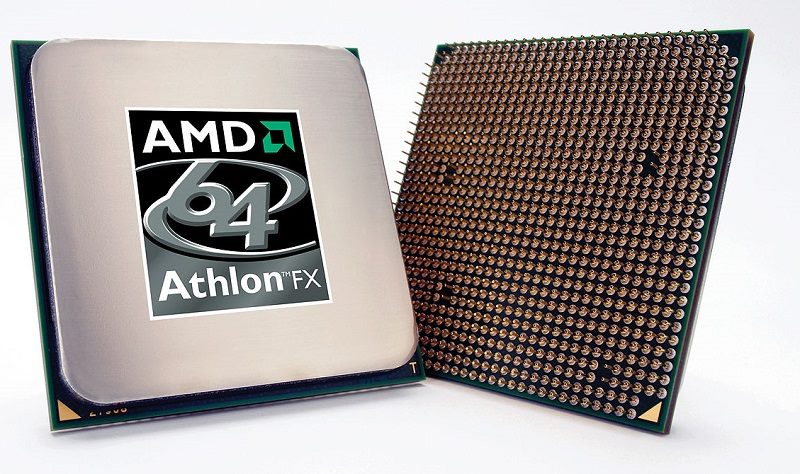

What do bits in a processor really mean?

When we talk about 32, 64, or 128-bit processors, we are referring to the size of data they can process simultaneously and the number of memory addresses they can handle.

A bit is the smallest unit of information in computing, representing a binary state: 0 or 1. An 8-bit number can take 256 different values (2^8), from 0 to 255 (2^8 - 1), while a 32-bit number reaches up to 4,294,967,295 (2^32 - 1). 64-bit processors handle astronomical figures, up to 18,446,744,073,709,551,615 (2^64 - 1).

Now imagine a 128-bit processor, capable of handling an almost unimaginable number: up to 340,282,366,920,938,463,463,374,607,431,768,211,455 (2^128 - 1). This exponential leap explains the enormous capacity such hardware could have, but also the great technical complexity its development and use would entail.
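These figures are simply 2^n - 1 for each bit width, which a few lines of Python can confirm. The sketch below relies on Python's arbitrary-precision integers; `max_unsigned` is a hypothetical helper name used only here:

```python
def max_unsigned(bits: int) -> int:
    """Largest value an unsigned integer of `bits` bits can hold: 2**bits - 1."""
    return (1 << bits) - 1


for bits in (8, 32, 64, 128):
    # An n-bit number has 2**n distinct values, from 0 up to max_unsigned(n).
    print(f"{bits:>3}-bit: {2 ** bits:,} values, maximum {max_unsigned(bits):,}")
```

Each extra bit doubles the range, which is why going from 64 to 128 bits squares the number of representable values rather than doubling it.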

What changes would a 128-bit Windows bring?

Visually, a 128-bit Windows would be virtually identical to its 64-bit version. The key difference would be its ability to address huge amounts of memory and to process data 128 bits at a time.

In theory, it could address 2^128 bytes, roughly 340 trillion yottabytes (about 281 trillion using 1,024-based units): an astronomical figure. To put it in context, a yottabyte is 1,024 zettabytes, and a zettabyte is 1,024 exabytes. The yottabyte was long the largest unit in data measurement, comparable to the "light-year" in astronomy, until the SI prefixes ronna- and quetta- were added in 2022.
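The arithmetic behind that figure is easy to check. This sketch assumes a full 128-bit byte address space and divides 2^128 bytes by a yottabyte in both conventions (SI, 10^24 bytes, and 1,024-based, 1,024^8 bytes); `in_yottabytes` is a hypothetical helper:

```python
ADDRESS_BITS = 128
TOTAL_BYTES = 2 ** ADDRESS_BITS  # every byte a 128-bit address could point to


def in_yottabytes(n_bytes: int, binary: bool = False) -> int:
    """Whole yottabytes in n_bytes: 1 YB = 1,024**8 bytes if binary, else 10**24 (SI)."""
    yottabyte = 1024 ** 8 if binary else 10 ** 24
    return n_bytes // yottabyte


print(f"{in_yottabytes(TOTAL_BYTES):,} YB (decimal)")
print(f"{in_yottabytes(TOTAL_BYTES, binary=True):,} YB (1,024-based)")
```

The two lines come out at about 340 trillion and exactly 2^48 (281,474,976,710,656) yottabytes respectively.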

Furthermore, it would need a 128-bit processor to fully use that capacity. This could bring improvements in speed and security, but mainly in very specific jobs that process large amounts of data per instruction, a niche that today is covered by SIMD extensions such as AVX-512.

Why are there no 128-bit processors or Windows versions?

The main reason is simple: they are not needed yet. Although technically possible, developing them today would be impractical due to technical and economic limitations. Hardware would have to evolve enormously before memory sizes approached the 64-bit limit, which already lies in the exabyte range.

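Currently, most users have between 16 and 32 GB of RAM. The most advanced consumer motherboards support up to 256 GB, while in professional environments configurations reach 2 to 6 TB per socket. Even in supercomputing, machines such as Frontier only recently crossed the exascale mark in computing speed (10^18 operations per second), and their memory is measured in petabytes, not exabytes.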

Current processors already use flexible designs that handle data wider than 64 bits in certain operations (such as AVX-512 on the Ryzen 9000, which operates on 512-bit vectors in a single instruction) without changing the entire architecture.
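To see why a 512-bit vector unit doesn't imply 512-bit addresses, here is a Python sketch that only simulates the semantics of a packed 64-bit addition (the kind of operation AVX-512 offers as VPADDQ): one 512-bit register is treated as eight independent 64-bit lanes. It models behavior, not performance; real code would use compiler intrinsics or assembly, and the helper names are hypothetical:

```python
LANE_BITS = 64
VECTOR_BITS = 512
LANES = VECTOR_BITS // LANE_BITS  # 8 lanes of 64 bits each
LANE_MASK = (1 << LANE_BITS) - 1


def pack(values: list[int]) -> int:
    """Pack eight unsigned 64-bit values into one 512-bit integer (lane 0 in the lowest bits)."""
    if len(values) != LANES:
        raise ValueError(f"expected {LANES} values")
    vector = 0
    for i, v in enumerate(values):
        vector |= (v & LANE_MASK) << (i * LANE_BITS)
    return vector


def unpack(vector: int) -> list[int]:
    """Split a 512-bit integer back into its eight 64-bit lanes."""
    return [(vector >> (i * LANE_BITS)) & LANE_MASK for i in range(LANES)]


def add_lanes(a: int, b: int) -> int:
    """Lane-wise add modulo 2**64: overflow in one lane never carries into the next."""
    return pack([(x + y) & LANE_MASK for x, y in zip(unpack(a), unpack(b))])


a = pack([LANE_MASK, 1, 2, 3, 4, 5, 6, 7])
b = pack([1] * LANES)
print(unpack(add_lanes(a, b)))
```

Lane 0 wraps around to 0 while the other lanes simply add 1: the wide register is eight 64-bit numbers side by side, so the processor stays a 64-bit design.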

Furthermore, switching to a 128-bit system presents challenges such as incompatibilities, complexities in software and hardware support, and the need to rewrite numerous drivers, which implies a huge investment without clear benefits in the short or medium term.

Currently, no application requires a 128-bit operating system for general use. Fields such as scientific research or encryption use specific 128-bit operations, but these run on 64-bit systems using specialized libraries.
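Those libraries (and compiler types such as GCC's and Clang's `unsigned __int128`) typically store a 128-bit number as two 64-bit halves and propagate the carry from the low half into the high half, much as an x86-64 ADD followed by ADC does. The Python sketch below only illustrates the idea: Python's own integers are arbitrary-precision, so the masks emulate 64-bit registers, and the function names are hypothetical:

```python
MASK64 = (1 << 64) - 1


def split128(x: int) -> tuple[int, int]:
    """Split a 128-bit unsigned value into (high, low) 64-bit halves."""
    return (x >> 64) & MASK64, x & MASK64


def join128(hi: int, lo: int) -> int:
    """Rebuild a 128-bit value from its 64-bit halves."""
    return (hi << 64) | lo


def add128(a: tuple[int, int], b: tuple[int, int]) -> tuple[int, int]:
    """Add two 128-bit numbers using only 64-bit pieces, wrapping modulo 2**128."""
    a_hi, a_lo = a
    b_hi, b_lo = b
    lo = a_lo + b_lo
    carry = lo >> 64  # 1 if the low halves overflowed 64 bits, else 0
    lo &= MASK64
    hi = (a_hi + b_hi + carry) & MASK64  # like ADC: fold the carry into the high half
    return hi, lo


# 2**64 - 1 plus 1 overflows the low half and carries into the high half.
print(add128(split128(2**64 - 1), split128(1)))
```

The result is (1, 0), i.e. exactly 2^64. Two ordinary 64-bit additions do the work of one 128-bit addition, which is why these operations don't require a 128-bit processor.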

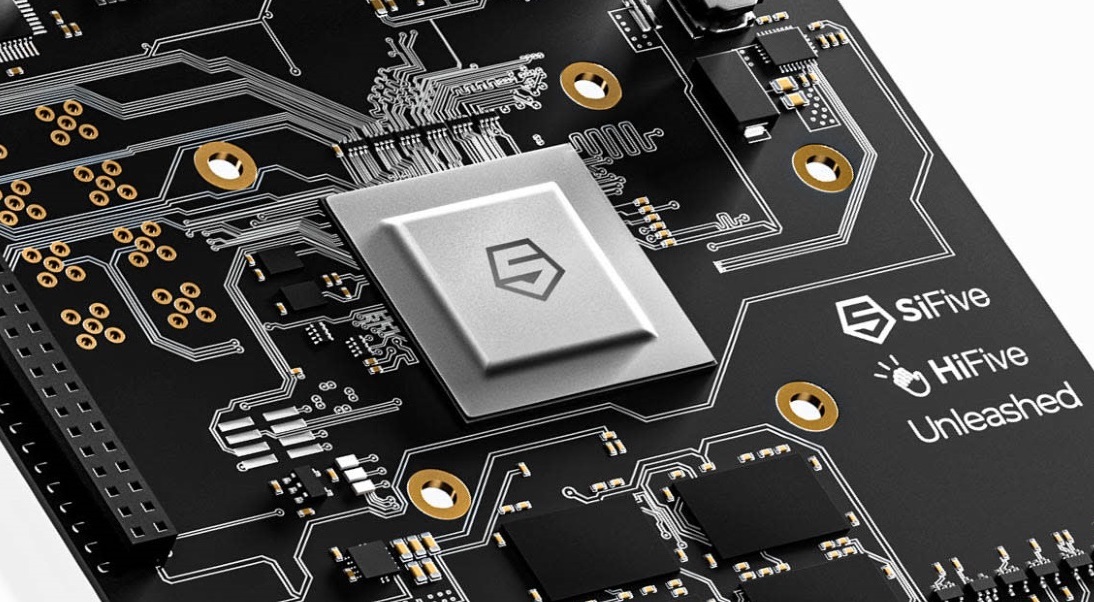

That doesn't mean we won't see 128-bit Windows or processors in the future. The RISC-V architecture already contemplates this possibility with a provisional 128-bit variant (RV128I), but we're decades away. Computing has shown us that unthinkable advances can happen quickly, but for now, the transition to 128 bits remains on the distant horizon.

An interesting parallel: 20 years ago, few people imagined home computers with 16 or 32 GB of RAM, and today that's the norm. Something similar could happen with bits in processors and operating systems, driven by an unexpected technological tipping point. ⏳

For now, the reality is that 64-bit operating systems and processors will continue to dominate for many decades, offering an optimal balance between performance, compatibility, and cost.