Office Widgets on Mac: Open files in 1 click 🖥️⚡️

Good news! Microsoft and Apple have teamed up to launch Recent Files widgets on Mac, which means you can now open recent Office files with just one click. 🖱️✨

In June of this year, Microsoft launched a Recent Files widget for the Microsoft 365 app on iPhone and iPad, and two months later, it added widgets for Word, Excel, and PowerPoint on those devices. Now, Microsoft and Apple have gone a step further by extending this functionality to Mac users. This means you can view and open your most recent Word, Excel, and PowerPoint files directly from your Mac desktop. 🚀📂

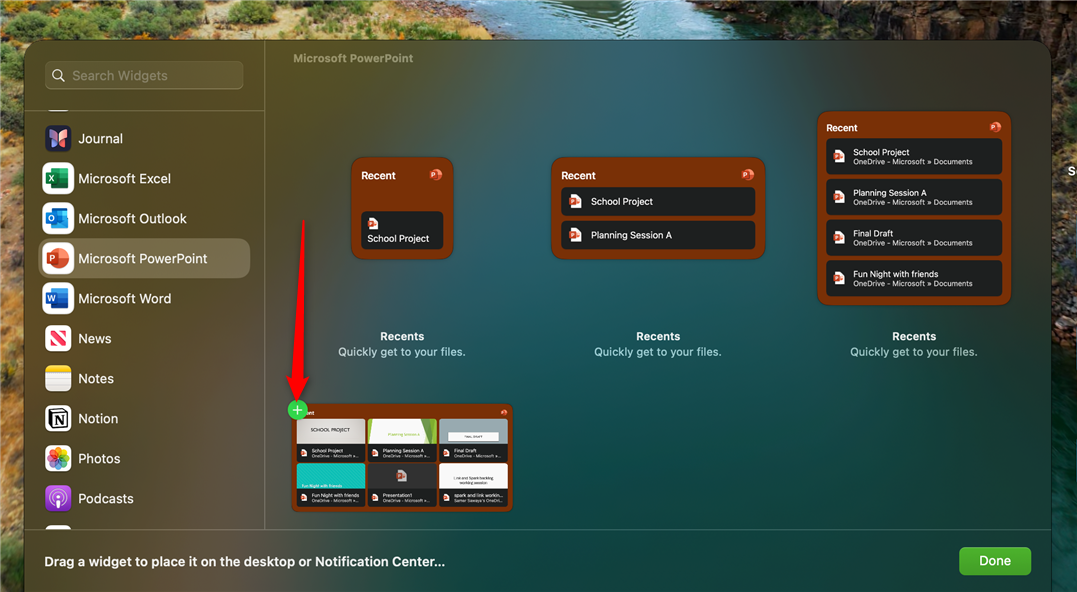

To take advantage of this new feature, right-click anywhere on your Mac desktop and select “Edit Widgets.” ✨

Then, type Word, Excel, or PowerPoint in the search box at the top of the left panel, or simply scroll through the list of apps to find the one you need. 🔍📄

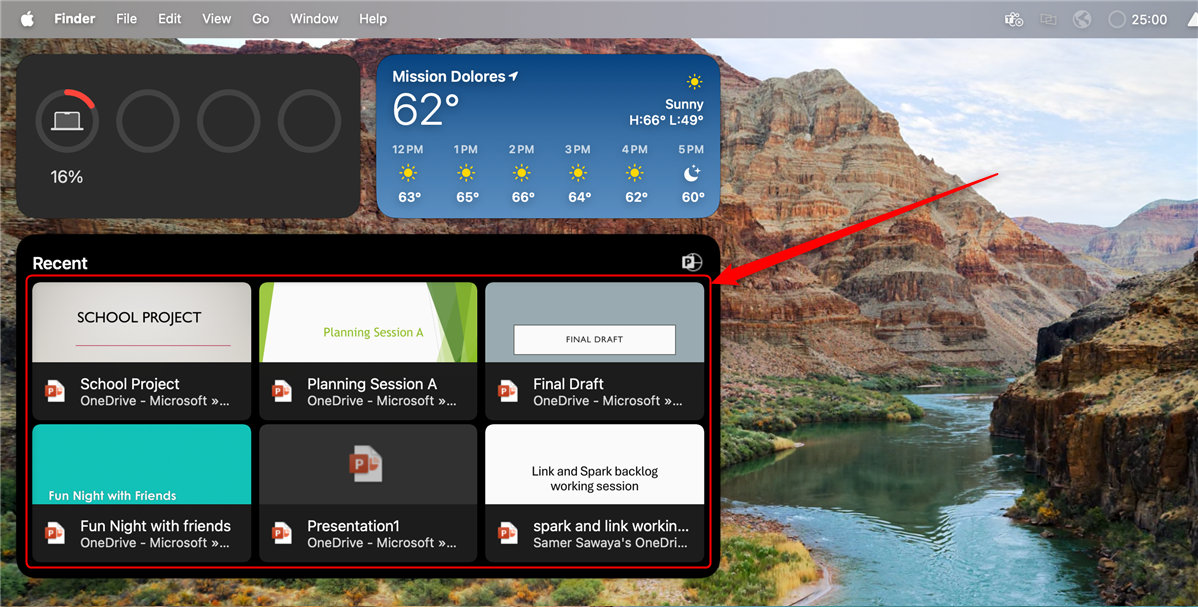

From there, choose a one-, two-, four-, or six-file widget by hovering over the corresponding size and clicking the green “+” icon in its top-left corner. 🗂️✅

Your most recent files will appear within the widget on your desktop. Simply click a file card to open that file instantly. 📁💨

If you're not sure which file you want to open, the widget also lets you access the Word, Excel, or PowerPoint home page by clicking inside the widget but outside the file list. The widgets presumably also work from the Notification Center. 📬📊

While the Recent Files widgets for Word, Excel, and PowerPoint are now widely available to iPhone and iPad owners after a testing phase, the Mac widgets are currently available only to Microsoft 365 Insiders running Version 16.91 (Build 24110320) or later. Since this is an extension of an existing feature, we anticipate it will roll out to all Mac users in the coming months, once any issues are resolved. 🕰️🔧