🌟 The Secrets of xAI Colossus: Discover Elon Musk's 100,000-GPU AI Cluster 🚀

If you're passionate about artificial intelligence and cutting-edge technology, you won't want to miss what Elon Musk is doing with his AI cluster. Known as xAI Colossus, this machine is making waves in the tech world. With a staggering 100,000 GPUs, the cluster is a true marvel of modern engineering. 🤖💻

In this article, we unravel the secrets behind this remarkable technological innovation. We will explore how xAI Colossus is revolutionizing the field of artificial intelligence and what this means for the future. 🌟 Get ready for a fascinating journey into the heart of one of the greatest technological feats of our time. 🚀 Don't miss it!

Elon Musk's expensive new project, the xAI Colossus AI supercomputer, has been detailed for the first time. YouTuber ServeTheHome was given access to the Supermicro servers inside the 100,000-GPU beast, and the resulting video showcases various facets of this supercomputer. Musk's xAI Colossus supercluster has been online for nearly two months, following an assembly that took 122 days. 🔧💡

What's inside a 100,000 GPU cluster? 🤔

Patrick from ServeTheHome takes us on a camera tour through different parts of the supercomputer, offering a bird's-eye view of its operations. Some finer details, such as its power consumption and the size of its pumps, could not be revealed due to a confidentiality agreement, and xAI blurred and censored parts of the video before its release. 🎥

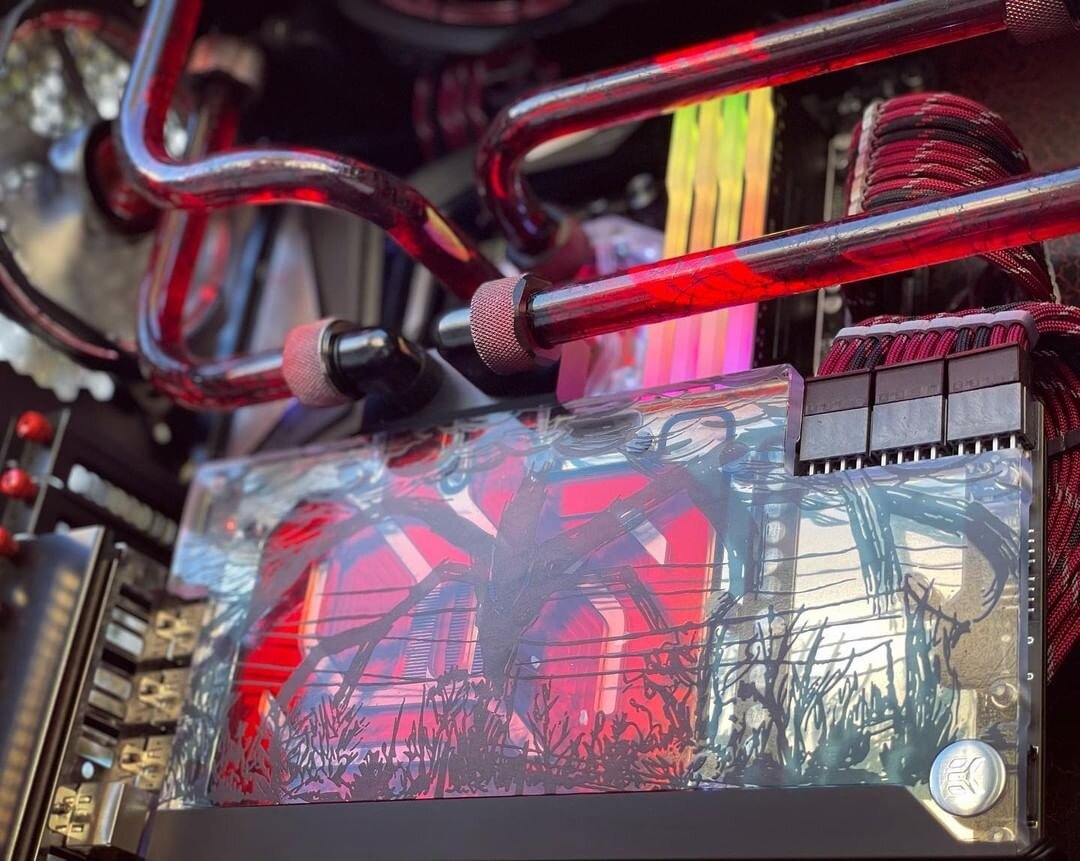

Despite this, the most important parts, such as Supermicro's GPU servers, remained virtually untouched in the footage. These GPU servers are Nvidia HGX H100 systems, a powerful server solution featuring eight H100 GPUs each. 🚀 The HGX H100 platform is housed in Supermicro's 4U Universal GPU Liquid Cooled System, which provides easily hot-swappable liquid cooling for each GPU. ❄️

These servers are organized into racks containing eight servers each, totaling 64 GPUs per rack. 1U manifolds are sandwiched between each HGX H100, providing the necessary liquid cooling for the servers. At the bottom of each rack, we find another 4U Supermicro unit, this time equipped with a redundant pump system and a rack monitoring system. 🔍

🖥️ These racks are organized into groups of eight, giving 512 GPUs per array. Each server is equipped with four redundant power supplies. At the rear of the GPU racks sit three-phase power supplies, Ethernet switches, and a rack-sized manifold that provides all the liquid cooling. 💧
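To make the hierarchy concrete, here is a minimal Python sketch of the arithmetic, using only the figures reported above: eight GPUs per HGX H100 server, eight servers per rack, and eight racks per array. The constant and function names are illustrative, not anything published by xAI or Supermicro.

```python
# Figures as reported in the article; the names themselves are illustrative.
GPUS_PER_SERVER = 8    # one Nvidia HGX H100 board carries 8 H100 GPUs
SERVERS_PER_RACK = 8   # eight 4U Supermicro GPU servers per rack
RACKS_PER_ARRAY = 8    # racks are grouped in sets of eight


def gpus_per_rack() -> int:
    """GPUs in one fully populated rack."""
    return GPUS_PER_SERVER * SERVERS_PER_RACK


def gpus_per_array() -> int:
    """GPUs in one group of eight racks."""
    return gpus_per_rack() * RACKS_PER_ARRAY


if __name__ == "__main__":
    print(f"GPUs per rack:  {gpus_per_rack()}")   # 64
    print(f"GPUs per array: {gpus_per_array()}")  # 512
```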

There are over 1,500 GPU racks in the Colossus cluster, spread across nearly 200 arrays. According to Nvidia CEO Jensen Huang, the GPUs in these 200 arrays were fully installed in just three weeks. 🚀
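As a rough sanity check on those counts, the sketch below works backward from the headline figure of 100,000 GPUs. It assumes every rack is fully populated with 64 GPUs and that racks are grouped eight to an array; both are simplifications, since the real cluster may contain partially filled racks or a GPU total that isn't exactly 100,000.

```python
import math

TOTAL_GPUS = 100_000   # headline figure; the exact count isn't published
GPUS_PER_RACK = 64     # 8 servers x 8 GPUs, assuming full racks
RACKS_PER_ARRAY = 8    # racks grouped in sets of eight


def racks_needed(total_gpus: int, gpus_per_rack: int = GPUS_PER_RACK) -> int:
    # Round up: a partially filled rack still takes up a rack position.
    return math.ceil(total_gpus / gpus_per_rack)


def arrays_needed(racks: int, racks_per_array: int = RACKS_PER_ARRAY) -> int:
    # Round up for the same reason at the array level.
    return math.ceil(racks / racks_per_array)


racks = racks_needed(TOTAL_GPUS)
arrays = arrays_needed(racks)
print(racks, arrays)  # 1563 196
```

The result (about 1,563 racks in about 196 arrays) lines up with the "over 1,500 racks" and "nearly 200 arrays" described above.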

Since an AI supercluster that constantly trains models requires huge bandwidth, xAI went above and beyond on network interconnectivity. 🔗 Each graphics card has a dedicated 400GbE NIC (network interface controller), and each server has an additional 400Gb NIC. This means that each HGX H100 server has 3.6 terabits per second of Ethernet bandwidth. Impressive, right? And yes, the entire cluster runs on Ethernet rather than InfiniBand or the other exotic interconnects that are standard in the supercomputing realm. 🌐
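The 3.6 Tb/s figure comes from counting NICs: eight per-GPU NICs plus one extra per server, each running at 400 Gb/s. A minimal Python sketch of that arithmetic, assuming nominal line rates (actual throughput would be lower once protocol overhead is counted):

```python
NIC_GBPS = 400             # each NIC is 400 Gb Ethernet (nominal line rate)
GPUS_PER_SERVER = 8        # one dedicated NIC per GPU in an HGX H100 server
EXTRA_NICS_PER_SERVER = 1  # the additional 400Gb NIC per server


def server_ethernet_gbps(gpus: int = GPUS_PER_SERVER,
                         extra_nics: int = EXTRA_NICS_PER_SERVER,
                         nic_gbps: int = NIC_GBPS) -> int:
    """Aggregate nominal Ethernet bandwidth of one server, in Gb/s."""
    return (gpus + extra_nics) * nic_gbps


gbps = server_ethernet_gbps()
print(f"{gbps} Gb/s = {gbps / 1000} Tb/s")  # 3600 Gb/s = 3.6 Tb/s
```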

Of course, a supercomputer that trains AI models such as the Grok 3 chatbot needs more than just GPUs to perform at its best. 🔥 Details about the storage and CPU servers in Colossus are more limited, but from what can be seen in Patrick's video and blog post, these servers are also mostly housed in Supermicro chassis. 🚀

Rows of NVMe-forward 1U servers with x86 CPUs inside provide storage and compute capacity, and they also feature liquid cooling at the rear. 💧 Outside, you can see very compactly arranged banks of Tesla Megapack batteries. ⚡️

The start-stop behavior of the array, with milliseconds of latency between banks, was too much for the conventional power grid or Musk's diesel generators to handle. So a number of Tesla Megapacks (each holding up to 3.9 MWh) are used as an energy buffer between the electrical grid and the supercomputer. 🖥️🔋 The batteries absorb the sudden swings in demand so that the grid and generators see a smoother load, avoiding interruptions. 🚦✨
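The article doesn't describe how xAI actually manages its Megapacks, but the general idea of a battery buffer can be shown with a toy energy-balance model: the grid supplies a flat power level, and the battery charges whenever the cluster draws less than that and discharges whenever it draws more. Everything below is hypothetical, including the load profile, the assumed pack count, the 15-minute time step (real swings happen on millisecond scales), and the absence of conversion losses.

```python
from typing import List, Tuple


def simulate_buffer(load_mw: List[float], grid_mw: float, capacity_mwh: float,
                    start_mwh: float, step_h: float) -> Tuple[List[float], List[float]]:
    """Toy battery buffer between a flat grid supply and a fluctuating load.

    Each step, the battery stores the grid surplus or covers the deficit.
    Returns (state of charge after each step in MWh, unmet load per step in MW).
    Conversion losses are ignored.
    """
    soc = start_mwh
    soc_trace: List[float] = []
    unmet_mw: List[float] = []
    for load in load_mw:
        soc += (grid_mw - load) * step_h  # positive charges, negative discharges
        shortfall = 0.0
        if soc < 0.0:
            shortfall = -soc / step_h     # power the empty battery could not supply
            soc = 0.0
        elif soc > capacity_mwh:
            soc = capacity_mwh            # battery full: the extra energy is not stored
        soc_trace.append(soc)
        unmet_mw.append(shortfall)
    return soc_trace, unmet_mw


# Hypothetical: ten 3.9 MWh packs (39 MWh), a flat 100 MW grid feed,
# and a cluster load swinging between 150 MW and 50 MW.
CAPACITY_MWH = 39.0
soc, unmet = simulate_buffer([150.0, 50.0, 150.0, 50.0], grid_mw=100.0,
                             capacity_mwh=CAPACITY_MWH, start_mwh=19.5, step_h=0.25)
print(soc)    # [7.0, 19.5, 7.0, 19.5]
print(unmet)  # [0.0, 0.0, 0.0, 0.0]
```

In this toy run the grid never sees the 50 MW to 150 MW swings; it delivers a steady 100 MW while the battery's charge rises and falls to absorb the difference.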

🌟 How Colossus is used, and Musk's stable of supercomputers 🌟

According to Nvidia, xAI's Colossus is currently the largest AI supercomputer in the world. 🤯 While many of the world's leading supercomputers are used by contractors or academics to research weather patterns, diseases, and other complex problems, Colossus is solely responsible for training the various AI models of X (formerly Twitter), most notably Grok 3, Elon's "anti-woke" chatbot that's available only to X Premium subscribers. 🤖

Additionally, ServeTheHome was told that Colossus is training AI models "of the future": models whose uses and capabilities supposedly go beyond what today's AI can do. 🚀 The first phase of Colossus construction is complete and the cluster is fully operational, but the work isn't over yet. The Memphis supercomputer will soon be upgraded to double its GPU capacity, with an additional 50,000 H100 GPUs and 50,000 next-generation H200 GPUs. 🔥
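The Phase 2 numbers can be tallied the same way. This sketch assumes Phase 1 consists of exactly 100,000 H100 GPUs, which the article implies but doesn't state precisely; the dictionary layout is just an illustrative way to keep the two GPU models separate.

```python
from typing import Dict

# Assumed Phase 1 fleet: the headline 100,000 figure, all H100s.
phase1: Dict[str, int] = {"H100": 100_000}
# Phase 2 additions as reported: 50,000 more H100s and 50,000 H200s.
phase2_additions: Dict[str, int] = {"H100": 50_000, "H200": 50_000}


def combine(*fleets: Dict[str, int]) -> Dict[str, int]:
    """Sum GPU counts per model across several fleet dictionaries."""
    total: Dict[str, int] = {}
    for fleet in fleets:
        for model, count in fleet.items():
            total[model] = total.get(model, 0) + count
    return total


after_phase2 = combine(phase1, phase2_additions)
total_gpus = sum(after_phase2.values())
print(after_phase2, total_gpus)           # {'H100': 150000, 'H200': 50000} 200000
print(total_gpus / sum(phase1.values()))  # 2.0
```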

This upgrade will also more than double its energy consumption, which is already more than the 14 diesel generators Musk added to the site in July can handle. ⚡ It still falls short of Musk's promise of 300,000 H200s inside Colossus, but that could come in a third phase of upgrades. 🔋

Elsewhere, the 50,000-GPU Cortex supercomputer at Tesla's "Giga Texas" plant also belongs to a Musk company. Cortex is dedicated to training Tesla's autonomous driving AI using camera feeds and image detection, as well as Tesla's autonomous robots and other AI projects. 🤖🚗

Additionally, Tesla will soon begin building the Dojo supercomputer in Buffalo, New York, a $500 million project. 💸 Meanwhile, industry voices such as Baidu CEO Robin Li predict that 99% of AI companies could collapse when the bubble bursts. Whether Musk's record spending on AI will backfire or pay off remains to be seen. ⏳